Machine Learning and Neural Networks

Roberto Santana and Unai Garciarena

Department of Computer Science and Artificial Intelligence

University of the Basque Country

MLNN course

Recommended bibliography

- I. Goodfellow and Y. Bengio and A. Courville. Deep Learning. Chapter. 5. Machine Learning Basics.. MIT Press. 2016.

- K. P. Murphy. Machine learning. A probabilistic perspective. MIT Press. 2012.

- (Auxiliar bibliography) G. Thomas. Mathematics for Machine Learning. 2018.

What is machine learning?

Definitions

- The capacity of a computer to learn from experience, i.e., to modify its processing on the basis of newly acquired information. (Oxford dictionary).

- ML is the field of study that gives computers the ability to learn without being explicitly programmed (Samuel:1959).

- Make the computer to adapt to new circumstances and to detect and extrapolate patterns.

- A computer program is said to learn from experience E with respect to some task T and some performance measure P, if its performance on T, as measured by P, improves with experience E (Mitchell:1997).

I. Goodfellow and Y. Bengio and A. Courville. Deep Learning.. MIT Press. 2016.

What is machine learning?

Definitions

- A computer program is said to learn from experience E with respect to some task T and some performance measure P, if its performance on T, as measured by P, improves with experience E (Mitchell:1997).

Components

- The experience E.

- The task T .

- The performance measure P.

I. Goodfellow and Y. Bengio and A. Courville. Deep Learning.. MIT Press. 2016.

Experience E

- Most of ML algorithms that we will consider understand or represent experience as a dataset of examples.

- An example is a collection of features that have been quantitatively measured from some object or event that we want the ML system to process.

- We usually represent an example as a vector \( {\bf{x}} \in \mathcal{R}^n \), where each entry \(x_i\) is a feature.

- One common way of representing a database is with a design matrix, where each row represents an example and each column a feature.

- For supervised classification problems, the class or target variable is part of the experience.

I. Goodfellow and Y. Bengio and A. Courville. Deep Learning.. MIT Press. 2016.

Task T

- The task is the final problem the ML is intended to solve. The same task can be solved using different ML algorithms.

Examples of tasks

- Classification: The ML algorithm is asked to specify which of k categories some example belongs to.

I. Goodfellow and Y. Bengio and A. Courville. Deep Learning.. MIT Press. 2016.

Task T

More examples of tasks

- Structured output: Involves any task where the output is a vector (or other data structure containing multiple values) with important relationships between the elements.

- Anomaly detection: Given a set of objects or events, the task is to identify some of them as unusual or atypical.

- Synthesis and sampling: To generate new examples that are similar to those in the training data.

- Denoising: The algorithm is given a corrupted example \( \tilde{{\bf{x}}} \in \mathcal{R}^n \) and it should output the clean example \( {\bf{x}} \in \mathcal{R}^n \).

I. Goodfellow and Y. Bengio and A. Courville. Deep Learning.. MIT Press. 2016.

Performance Measure P

- A quantitative measure of the performance of the ML algorithm. Usually, P is specific to the task T.

Examples of performance measures

- Classification: Usually, the accuracy (proportion of examples for which the model produces the correct output).

- Classification: Also, the error rate (proportion of examples for which the model produces the incorrect output).

- Regression: A measure of the distance between the prediction, and the target variable, for example the mean squared error .

- Denoising: The amount of corruption that has been removed from the original example.

I. Goodfellow and Y. Bengio and A. Courville. Deep Learning.. MIT Press. 2016.

Classifiers

The three components of learning algorithms

- Representation: Choosing a representation for a classifier influence to a large extent the set of classifiers that it can learn. This set is called the hypothesis space of the learner.

- Evaluation: To distinguish between good and poor classifiers at least one criterion is required that is usually evaluating using an objective function or scoring function.

- Optimization: The search for the best classifier in the hypothesis space is usually posed as an optimization problem. The choice of the optimization technique is key to the efficiency of the learner.

P. Domingos. A few useful things to know about machine learning. Communications of the ACM, 55(10), 78-87. 2012.

The three components of learning algorithms

Representation |

Evaluation |

Optimization |

Instances |

Accuracy/Error rate |

Combinatorial optimization |

--K-nearest neighbor |

Precision and recall |

--Greedy search |

-- Support vector machines |

Squared error |

--Beam search |

Hyperplanes |

Likelihood |

--Branch-and-bound |

--Naive Bayes |

Posterior probability |

Continuous optimization |

--Logistic regression |

Information gain |

-Unconstrained |

Decision trees |

KL divergence |

--Gradient descent |

Set of rules |

Cost/Utility |

--Conjugate gradient |

--Propositional rules |

Margin |

--Quasi-Newton methods |

--Logic programs |

-Constrained |

|

Neural networks |

--Linear programming |

|

Graphical models |

--Quadratic programming |

|

--Bayesian networks |

Classification problems

Probability analysis

Let \( p({\bf{x}}) \) be a probability distribution defined on a discrete feature \( {\bf{x}} \). \( p({\bf{x}}) \) satisfies the following:

\[ p[{\bf{X}} = {\bf{x}}] = p({\bf{x}}) \]

\[ p({\bf{x}}) \geq 0 \; \; \forall {\bf{x}} \]

\[ \sum_{{\bf{x}}} p({\bf{x}}) = 1 \]

Classification problems

Probability analysis

- Let \( P(C_1) \) and \( P(C_2) \) be the probabilities we know a priori that an observation belongs to clases \( C_1 \) and \( C_2 \), respectively.

- \( P(C_i,{\bf{x}}) \) is the joint probability that \({\bf{X}} \) takes value \( {\bf{x}} \) and belongs to class \( C_i \)

- We define \( P({\bf{x}}|C_i) \) as the conditional probability that \( {\bf{X}} \) takes value \( {\bf{x}} \) given that it belongs to class \( C_i \)

Classification problems

Probability analysis

- We know that \( P(C_i,{\bf{x}}) = P({\bf{x}}|C_i) P(C_i) \) and \( P(C_i,{\bf{x}}) = P(C_i|{\bf{x}}) P({\bf{x}}) \).

- Therefore, \[ P(C_i|{\bf{x}}) = \frac{P({\bf{x}}|C_i) P(C_i)}{P({\bf{x}})} \]

- This expression is referred as Bayes's theorem.

- \(P(C_i) \) is known as the prior probability.

- \( P({\bf{x}}|C_i) \) is the class conditional probability of \(P({\bf{x}}) \) for class \( C_i \).

- \(P(C_i|{\bf{x}})\) is the posterior probability

Classification problems

Probability analysis

- The posterior probability \( P(C_i|{\bf{x}}) \) gives the probability of a sample belonging to class \( C_i \) once we have observed the feature vector \( {\bf{x}} \).

- The probability of misclassification is minimized by selecting the clas \( C_i \) having the largest posterior probability, i.e., \[ P(C_i|{\bf{x}}) > P(C_j|{\bf{x}}) \; \; \forall j \neq i. \]

Classification problems

Classifiers

- According to the largest posterior probability \( P(C_i|{\bf{x}}) \), a classifier provides a rule for assigning each point of the feature space to one of \(k\) classes.

- Therefore, a classifier divides the feature space into \( k \) decision regions \( \{ \mathcal{R}_1, \dots, \mathcal{R}_k \} \).

- We can extend the idea of using posterior probabilities for each class by defining a set of discriminant functions \( y_1({\bf{x}}), \dots, y_k({\bf{x}}) \) such that an input vector \( {\bf{x}} \) is assigned to class \( C_i \) if \[ y_i({\bf{x}}) > y_j({\bf{x}}) \; \; \forall j \neq i. \]

Supervised learning

Generative versus discriminative classifiers

- Generative: Provide a model of how the observations can be generated given the class.

- Discriminative: Does not provide a model but allow the discrimination of the observation according to the classes.

- Discriminative models learn the boundary between classes while generative models model the distribution of individual classes.

- Examples of generative models: naive Bayes.

- Examples of discriminative models: decision trees.

Supervised learning

Regressors and classifiers

C. M. Bishop. Neural Networks for Pattern Recognition. Oxford University Press. 2005.

P. Domingos. A few useful things to know about machine learning. Communications of the ACM, 55(10), 78-87. 2012.

Supervised learning

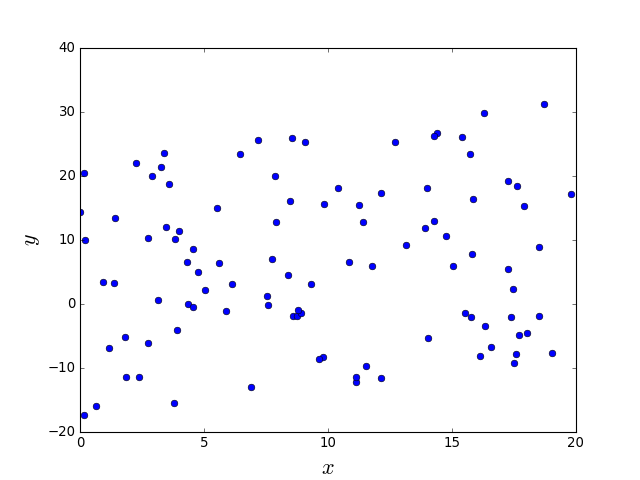

Regression

Supervised learning

Regression

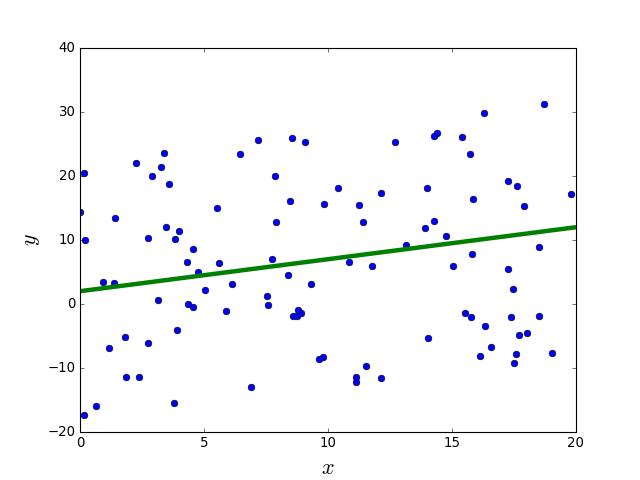

Supervised learning

Linear regression

A set of \(N\) tuples \( (x^1,y^1), \dots (x^N,y^N) \) is given, where \(y\) is the target or dependent variable and \(x\) is the covariate, independent variable or predictor. The task is to predict \(y\) given \(x\).

General regression model: \[ y = f(x) + \epsilon \]

where \( \epsilon \) is the irreducible error that does not depend on x.

Linear regression model: \[ f(x) = \beta_1 x + \beta_0 \]

Linear regression estimate: \[ \hat{y} = \hat{\beta_1} x + \hat{\beta_0} \]

The residual error is the difference between the prediction and the true value. \[ e^i = y^i - \hat{y}^i \]

K. P. Murphy. Machine learning. A probabilistic perspective. MIT Press. 2012.

Supervised learning

Linear regression

The mean squared error is usually used: \[ MSE = \frac{1}{N} \sum_{i=1}^N (y^i - \hat{y}^i)^2 \]

The parameters of the model that minimize this error are learned: \[ \arg \min_{\beta_0,\beta_1} \frac{1}{N} \sum_{i=1}^N (y^i - (\beta_1 x^i + \beta_0))^2 \]

After differentiating with respect to \( \beta_0,\beta_1 \) and equalling to \(0\), we get: \[ \hat{\beta_0} = \bar{y} - \hat{\beta_1}\bar{x}; \; \; \hat{\beta_1} = \frac{\sum_{i=1}^N x^i y^i - N \bar{x} \bar{y}} {\sum_{i=1}^N (x^i)^2 - N \bar{x}^2} \] where \(\bar{x}\) and \(\bar{y}\) are the mean of \(x\) and \(y\) as computed from the data.

K. P. Murphy. Machine learning. A probabilistic perspective. MIT Press. 2012.

Supervised learning

Multiple linear regression

In multiple linear regression we have multiple covariates, represented as a vector \({\bf{x}} \). The model is linear on the covariates.

Multiple linear regression model: \[ f(x) = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \dots + \beta_n x_n + \epsilon \]

Let \( {\bf{w}} \) be the model weight vector containing \(\beta\) values, , \( {\bf{w}}^T {\bf{x}} \) represents the inner or scalar product between the input vector \( {\bf{x}} \) and the weight vector.

Then, the multiple linear regression model in matrix form is expressed as: \[ y({\bf{x}}) = {\bf{w}}^T {\bf{x}} + \epsilon = \sum_{j=1}^{n} w_jx_j + \epsilon \]

Estimates of \(w\) are found by minimizing the MSE in a way similar to the case of a single covariate. That way the parameters of the model are learned.

K. P. Murphy. Machine learning. A probabilistic perspective. MIT Press. 2012.